Apache Spark: All about Serialization

Overview of How You Can Tune Your Spark Jobs to Improve Performance

In distributed systems, moving data over the network is one of the most common operations. If it is not handled efficiently, you can run into high memory usage, network bottlenecks, and poor performance.

Serialization plays an important role in the performance of any distributed application.

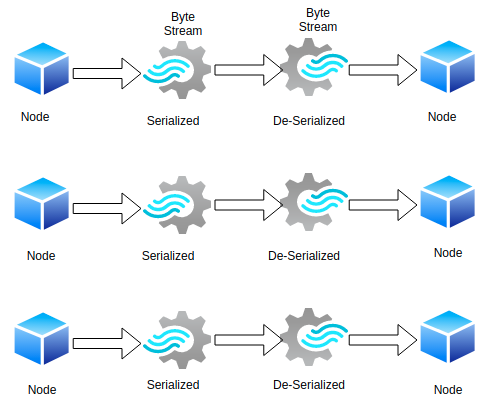

Serialization is the conversion of an object into a stream of bytes; de-serialization is the reverse. A compact, fast format matters because the bytes are either transferred between nodes of the network or stored in a file or memory buffer.

Spark provides two serialization libraries, and you choose between them through the spark.serializer property.

- Java serialization (default)

Java serialization is the default serializer that Spark uses when we spin up the driver. Spark serializes objects using Java’s ObjectOutputStream framework. A class enables serialization by implementing the java.io.Serializable interface. Classes that do not implement this interface will not have any of their state serialized or de-serialized. All subtypes of a serializable class are themselves serializable.

A class is never serialized, only the object of a class is serialized.
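As a minimal sketch of the mechanism described above, the following round-trips an object through ObjectOutputStream and ObjectInputStream using only the JDK. The `Reading` and `Handle` classes and the `JavaSerializationDemo` helper are hypothetical names made up for illustration; they are not part of Spark.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.NotSerializableException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Hypothetical record type: it opts in by implementing Serializable.
class Reading implements Serializable {
    private static final long serialVersionUID = 1L;
    final String sensor;
    final double value;

    Reading(String sensor, double value) {
        this.sensor = sensor;
        this.value = value;
    }
}

// Hypothetical type that does NOT implement Serializable.
class Handle {
    final int id;

    Handle(int id) {
        this.id = id;
    }
}

class JavaSerializationDemo {
    // Object -> bytes. Checked I/O errors are rethrown unchecked to keep call sites short.
    static byte[] toBytes(Object obj) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(obj);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Bytes -> object.
    static Object fromBytes(byte[] bytes) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    // True when Java serialization refuses the object because its class is not Serializable.
    static boolean isRejected(Object obj) {
        try {
            toBytes(obj);
            return false;
        } catch (UncheckedIOException e) {
            return e.getCause() instanceof NotSerializableException;
        }
    }

    public static void main(String[] args) {
        Reading copy = (Reading) fromBytes(toBytes(new Reading("s1", 21.5)));
        System.out.println(copy.sensor + " " + copy.value);
        System.out.println("Handle rejected: " + isRejected(new Handle(7)));
    }
}
```

Note that the bytes describe one particular object (its class name plus field values), which is the sense in which an object, not a class, is serialized.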

Java serialization is slow and leads to large serialized formats for many classes. We can fine-tune the performance by implementing java.io.Externalizable, which puts the class itself in charge of what is written and read.
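To illustrate the Externalizable route, here is a small sketch that serializes the same three fields twice: once with default Serializable behavior and once with hand-written writeExternal/readExternal. The `EventDefault`, `EventCompact`, and `ExternalizableDemo` names are hypothetical. The size difference on such a tiny object comes mostly from skipping the per-field metadata, so treat it as an illustration rather than a benchmark.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.Externalizable;
import java.io.IOException;
import java.io.ObjectInput;
import java.io.ObjectInputStream;
import java.io.ObjectOutput;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Hypothetical type using default Java serialization.
class EventDefault implements Serializable {
    private static final long serialVersionUID = 1L;
    long userId;
    int score;
    boolean active;

    EventDefault(long userId, int score, boolean active) {
        this.userId = userId;
        this.score = score;
        this.active = active;
    }
}

// Same fields, but the class writes and reads them itself.
class EventCompact implements Externalizable {
    long userId;
    int score;
    boolean active;

    // Externalizable requires a public no-arg constructor for de-serialization.
    public EventCompact() {}

    EventCompact(long userId, int score, boolean active) {
        this.userId = userId;
        this.score = score;
        this.active = active;
    }

    @Override
    public void writeExternal(ObjectOutput out) throws IOException {
        out.writeLong(userId);
        out.writeInt(score);
        out.writeBoolean(active);
    }

    @Override
    public void readExternal(ObjectInput in) throws IOException {
        // Fields must be read in the same order they were written.
        userId = in.readLong();
        score = in.readInt();
        active = in.readBoolean();
    }
}

class ExternalizableDemo {
    static byte[] toBytes(Object obj) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(obj);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    static Object fromBytes(byte[] bytes) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        int defaultSize = toBytes(new EventDefault(42L, 7, true)).length;
        int compactSize = toBytes(new EventCompact(42L, 7, true)).length;
        System.out.println("Serializable:   " + defaultSize + " bytes");
        System.out.println("Externalizable: " + compactSize + " bytes");
    }
}
```

The trade-off is maintenance: every field added to the class must also be added to both methods, in matching order.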

- Kryo serialization (recommended by Spark)

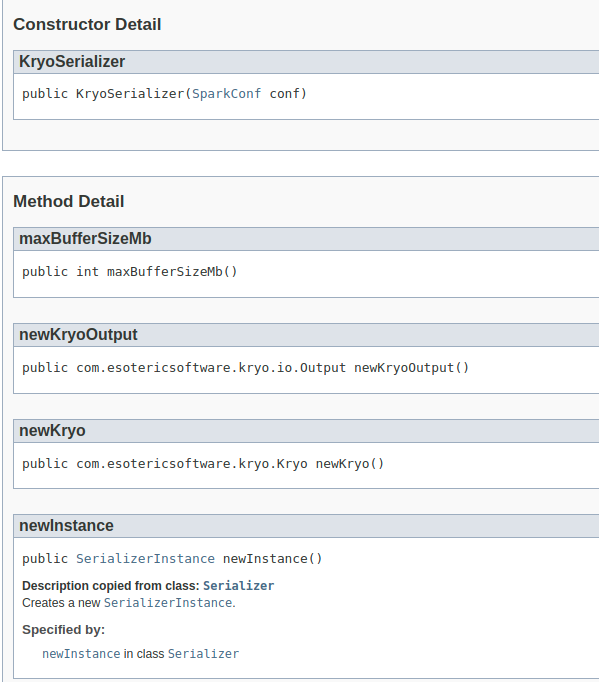

    public class KryoSerializer extends Serializer implements Logging, java.io.Serializable

Kryo is a Java serialization framework that focuses on speed, efficiency, and a user-friendly API.

Kryo has a smaller memory footprint, which becomes very important when you are shuffling and caching a large amount of data. However, Spark does not natively support Kryo for serializing to disk: both saveAsObjectFile on RDD and objectFile on SparkContext support Java serialization only.

Kryo is not the default because it requires custom class registration and manual configuration.

Default Serializer

When Kryo serializes an object, it creates an instance of a previously registered Serializer class to do the conversion to bytes. Default serializers can be used without any setup on our part.

Custom Serializer

For more control over the serialization process, Kryo provides two options. We can write our own Serializer class and register it with Kryo or let the class handle the serialization by itself.

Let's see how we can set up Kryo to use in our application.

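    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.sql.SparkSession

    // Option 1: configure Kryo on a SparkConf and create a SparkContext.
    val conf = new SparkConf()
      .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      // spark.kryoserializer.buffer replaces the deprecated spark.kryoserializer.buffer.mb
      .set("spark.kryoserializer.buffer", "24m")
    val sc = new SparkContext(conf)

    // Option 2: configure Kryo while building a SparkSession.
    val spark = SparkSession.builder()
      .appName("KryoSerializerExample")
      .config(someConfig) // someConfig: an existing SparkConf holding your other settings
      .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .config("spark.kryoserializer.buffer", "1024k")     // initial buffer size
      .config("spark.kryoserializer.buffer.max", "1024m") // upper limit the buffer may grow to
      // With this set, serializing a class that was not registered throws an exception.
      .config("spark.kryo.registrationRequired", "true")
      .getOrCreate()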

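The buffer holds each object while it is being serialized, so spark.kryoserializer.buffer.max must be larger than the largest object you will serialize. If you see a "buffer limit exceeded" exception inside Kryo, increase this value.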

KryoSerializer is a helper class provided by Spark to deal with Kryo. We create a single instance of KryoSerializer that configures the required buffer sizes provided in the configuration.

Finally, you can follow these guidelines by Databricks to avoid serialization issues:

- Make the object/class serializable.

- Declare the instance within the lambda function.

- Declare functions inside an object as much as possible.

- Redefine variables provided to class constructors inside functions.
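The last guideline is easiest to see in code. The sketch below uses plain Java lambdas and JDK serialization rather than Spark itself, on the assumption that the same capture rule is what bites in Spark closures: a function that reads a field of its enclosing object drags the whole object along. `SerializableFunction`, `Scaler`, and `ClosureDemo` are hypothetical names for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.function.Function;

// Hypothetical stand-in for a function type that must be shipped to another node.
interface SerializableFunction<A, B> extends Function<A, B>, Serializable {}

// Hypothetical job class that is NOT Serializable.
class Scaler {
    private final int factor;

    Scaler(int factor) {
        this.factor = factor;
    }

    // Reads the field inside the lambda, so the lambda captures `this` (the whole Scaler).
    SerializableFunction<Integer, Integer> capturingThis() {
        return x -> x * factor;
    }

    // Copies the constructor-provided value into a local first,
    // so the lambda captures only an int.
    SerializableFunction<Integer, Integer> capturingLocal() {
        final int localFactor = factor;
        return x -> x * localFactor;
    }
}

class ClosureDemo {
    // True when the object, including everything it captured, can be Java-serialized.
    static boolean canSerialize(Object obj) {
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            out.writeObject(obj);
            return true;
        } catch (IOException e) {
            // NotSerializableException is a subclass of IOException.
            return false;
        }
    }

    public static void main(String[] args) {
        Scaler scaler = new Scaler(3);
        System.out.println("captures this:  " + canSerialize(scaler.capturingThis()));
        System.out.println("captures local: " + canSerialize(scaler.capturingLocal()));
    }
}
```

Making `Scaler` implement Serializable would also work (the first guideline), but copying the value into a local keeps the serialized closure small and avoids shipping unrelated state.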

Resources:

https://spark.apache.org/docs/latest/tuning.html

https://github.com/EsotericSoftware/kryo
